Overview

CLLT conducts research on Large Language Models (LLMs) across multiple dimensions: from understanding their reasoning capabilities and limitations, to developing applications for classical texts and dialectal varieties, to engaging with the broader public about AI and language technology.

Our work spans theoretical investigation of LLM behavior, practical applications in digital humanities and NLP, and public outreach to make AI accessible to non-technical audiences.

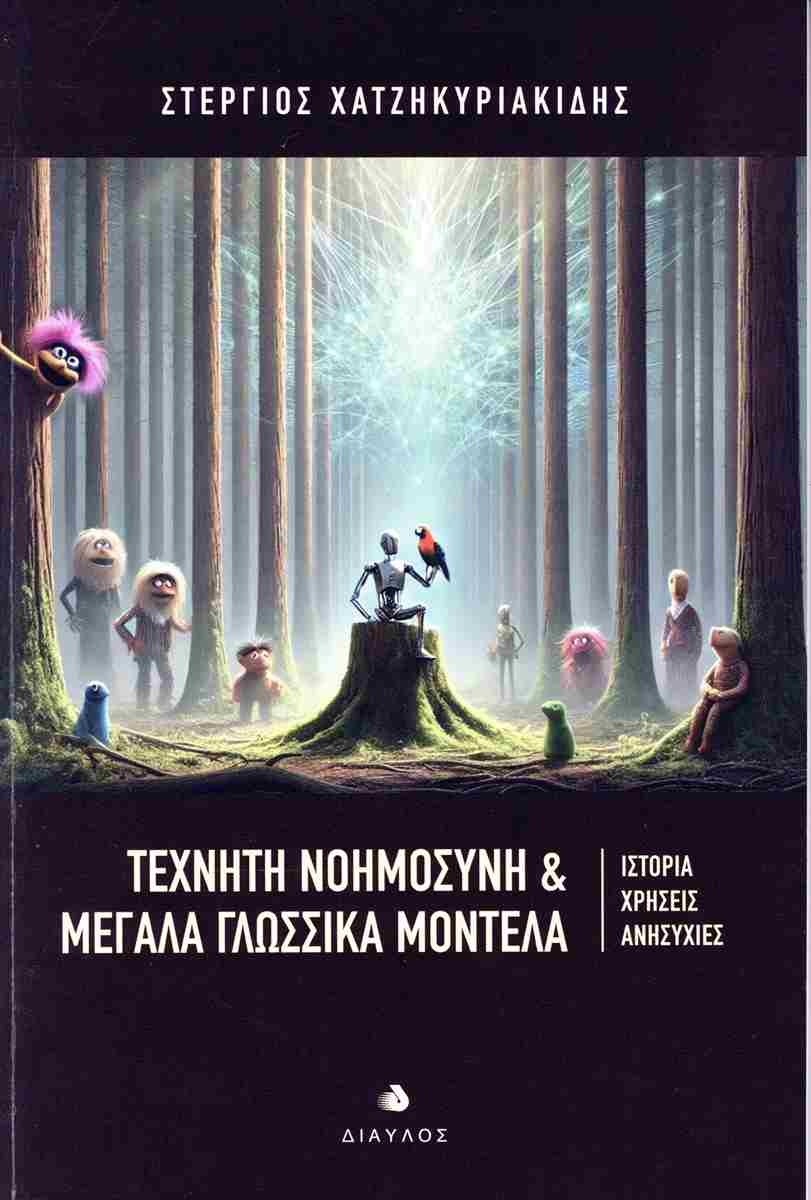

Public Engagement: Bringing LLMs to the General Public

Artificial Intelligence and Large Language Models

A comprehensive, public-facing book in Greek that explains artificial intelligence and large language models to a general audience. This work bridges the gap between cutting-edge AI research and public understanding, covering the history, uses, and concerns surrounding modern language technology.

The book makes complex AI concepts accessible, helping readers understand how LLMs work, their capabilities and limitations, and their impact on society.

Thucydides Goes Ragging: Event Extraction from Classical Texts

RAG-Enhanced Event Knowledge Extraction

Applying Large Language Models with Retrieval-Augmented Generation (RAG) to extract event knowledge from Thucydides' historical texts. This work demonstrates how modern LLMs can be combined with symbolic reasoning to extract structured knowledge from classical Greek literature.

The "Thucydides Goes Ragging" project showcases LLM capabilities in understanding complex historical narratives and extracting structured event information, combining neural language understanding with formal knowledge representation.
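
The project's pipeline is not detailed here, so what follows is only a minimal Python sketch of the retrieval half of a RAG setup, not the project's implementation. It assumes passages are plain strings. It ranks them with bag-of-words cosine similarity, a deliberately simple stand-in for whatever retriever the project uses (dense embeddings are more common in practice), and places the top matches into an extraction prompt. The function names (`retrieve`, `build_prompt`) and the event schema in the prompt are hypothetical, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; \w is Unicode-aware in Python 3, so Greek script matches too
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words term-count vectors
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    # Hypothetical retriever: rank passages by similarity to the query, keep the top k
    # (sorted() is stable, so tied passages keep their input order)
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str], k: int = 2) -> str:
    # Hypothetical event schema; the project's actual schema is not specified
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, k))
    return (
        "Using only the passages below, list each event as a JSON object "
        'with the keys "agent", "action", "target" and "place".\n'
        f"Passages:\n{context}\n"
        f"Question: {question}"
    )


# Toy English summaries standing in for retrieved passages of Thucydides
passages = [
    "The Thebans attacked Plataea at night.",
    "The Athenians sailed against Syracuse.",
    "The plague broke out in Athens in the second year of the war.",
]
prompt = build_prompt("What happened at Plataea?", passages, k=1)
print(prompt)
# The prompt would then go to an LLM client of your choice (not shown).
```

Grounding the model in retrieved passages is what lets the extracted events be traced back to specific parts of the text. For the Greek original, one would likely also want to normalize accents and breathings before matching, which this sketch does not do.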

MEDEA Platform

MEDEA-NEUMOUSA

Advanced platform for computational analysis of ancient Greek texts that uses Large Language Models for knowledge graph extraction and neuro-symbolic reasoning. By pairing LLMs with symbolic reasoning, MEDEA supports fine-grained analysis of classical literature.

Key Features:

- LLM-powered entity recognition and relationship extraction

- Knowledge graph construction from classical texts

- Semantic search across ancient Greek corpora

- Integration of neural and symbolic methods

- Tools for philological research and annotation
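
To illustrate how neural and symbolic methods can meet in a knowledge graph, here is a minimal Python sketch. It is not MEDEA's data model. Facts of the kind an LLM might extract are stored as (subject, relation, object) triples. A hand-written symbolic rule then derives new facts by forward chaining until nothing new appears: transitivity of a hypothetical `located_in` relation. The class name, method names and relation names are assumptions made for the example.

```python
# Hypothetical alias: one fact as (subject, relation, object)
Triple = tuple[str, str, str]


class KnowledgeGraph:
    """Minimal in-memory triple store (illustrative sketch only)."""

    def __init__(self) -> None:
        self.triples: set[Triple] = set()

    def add(self, subject: str, relation: str, obj: str) -> None:
        # In a neuro-symbolic pipeline these triples would come from LLM extraction
        self.triples.add((subject, relation, obj))

    def objects(self, subject: str, relation: str) -> list[str]:
        # All objects linked to `subject` by `relation`, sorted for stable output
        return sorted(o for (s, r, o) in self.triples if s == subject and r == relation)

    def close_transitive(self, relation: str) -> int:
        # Symbolic rule applied by forward chaining until a fixpoint:
        #   (x relation y) and (y relation z)  =>  (x relation z)
        # Returns the number of newly inferred triples.
        inferred = 0
        while True:
            edges = [(s, o) for (s, r, o) in self.triples if r == relation]
            new = {
                (x, relation, z)
                for (x, y) in edges
                for (y2, z) in edges
                if y == y2
            } - self.triples
            if not new:
                return inferred
            self.triples |= new
            inferred += len(new)


kg = KnowledgeGraph()
kg.add("Thucydides", "citizen_of", "Athens")
kg.add("Athens", "located_in", "Attica")
kg.add("Attica", "located_in", "Greece")
print(kg.close_transitive("located_in"))   # 1
print(kg.objects("Athens", "located_in"))  # ['Attica', 'Greece']
```

Naive forward chaining is the simplest way to apply such rules and is fine for a toy graph. A real system would more plausibly rely on an RDF store with a reasoner or a graph database.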

Dialectal Llama-krikri: Fine-tuning for Greek Dialects

Specialized LLMs fine-tuned for Greek dialectal varieties

Cretan Dialect

Fine-tuned Llama model for Cretan Greek

Cypriot Dialect

Specialized model for Cypriot Greek

Northern Greek Dialect

Model trained on Northern Greek varieties

Pontic Dialect

Model for Pontic Greek

Fine-tuning Large Language Models (specifically Llama) on Greek dialectal varieties enables NLP applications for these low-resource varieties. The models are fine-tuned on the GRDD dataset and illustrate the potential of LLM fine-tuning for under-resourced languages.

Source Code

GitHub Repository

Research Directions

Our LLM research continues along these lines, from evaluating the reasoning capabilities of LLMs to building applications for classical texts and low-resource languages. We combine theoretical investigation with practical applications, with the aim of making AI more accessible and useful to researchers and the general public alike.