Our Approach

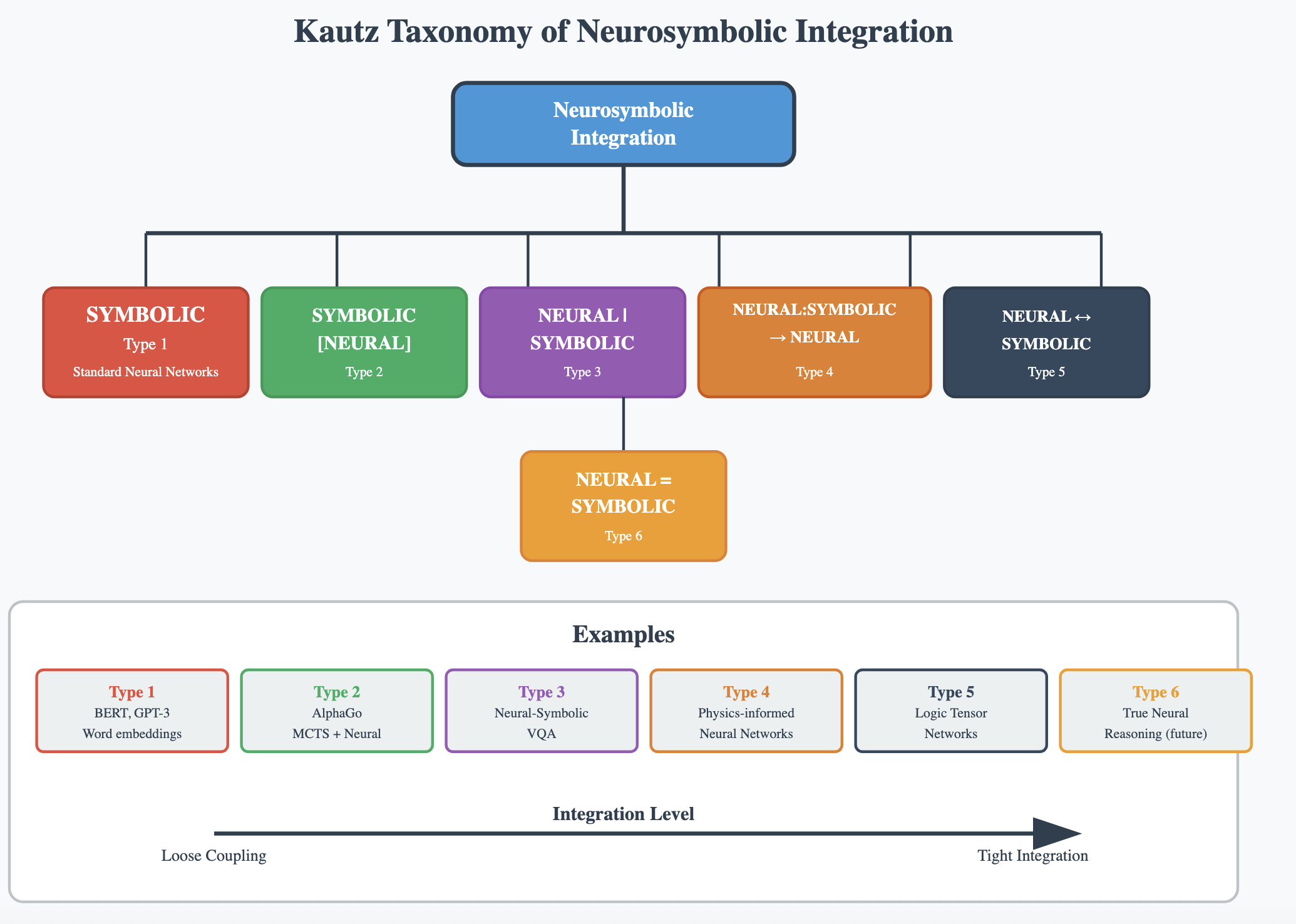

CLLT's work on natural language inference (NLI) embraces both neural and symbolic approaches, recognizing the strengths of each paradigm. We develop neural models for their scalability and robustness, while integrating symbolic reasoning for interpretability and precision. Our research aims at neural-symbolic (NeSy) integration, combining the best of both worlds.

We investigate fundamental questions about reasoning capabilities in NLI systems: how models handle complex inference patterns, what role linguistic structure plays, and how surface-level features such as punctuation affect inference judgments. Our work ranges from theoretical investigations of meta-inferential properties to practical systems that leverage both neural architectures and formal semantics.

Key Research Areas

Reasoning Capabilities

Investigating the meta-inferential properties of NLI systems, understanding what kinds of reasoning patterns models can and cannot handle, and developing methods to improve reasoning capabilities.

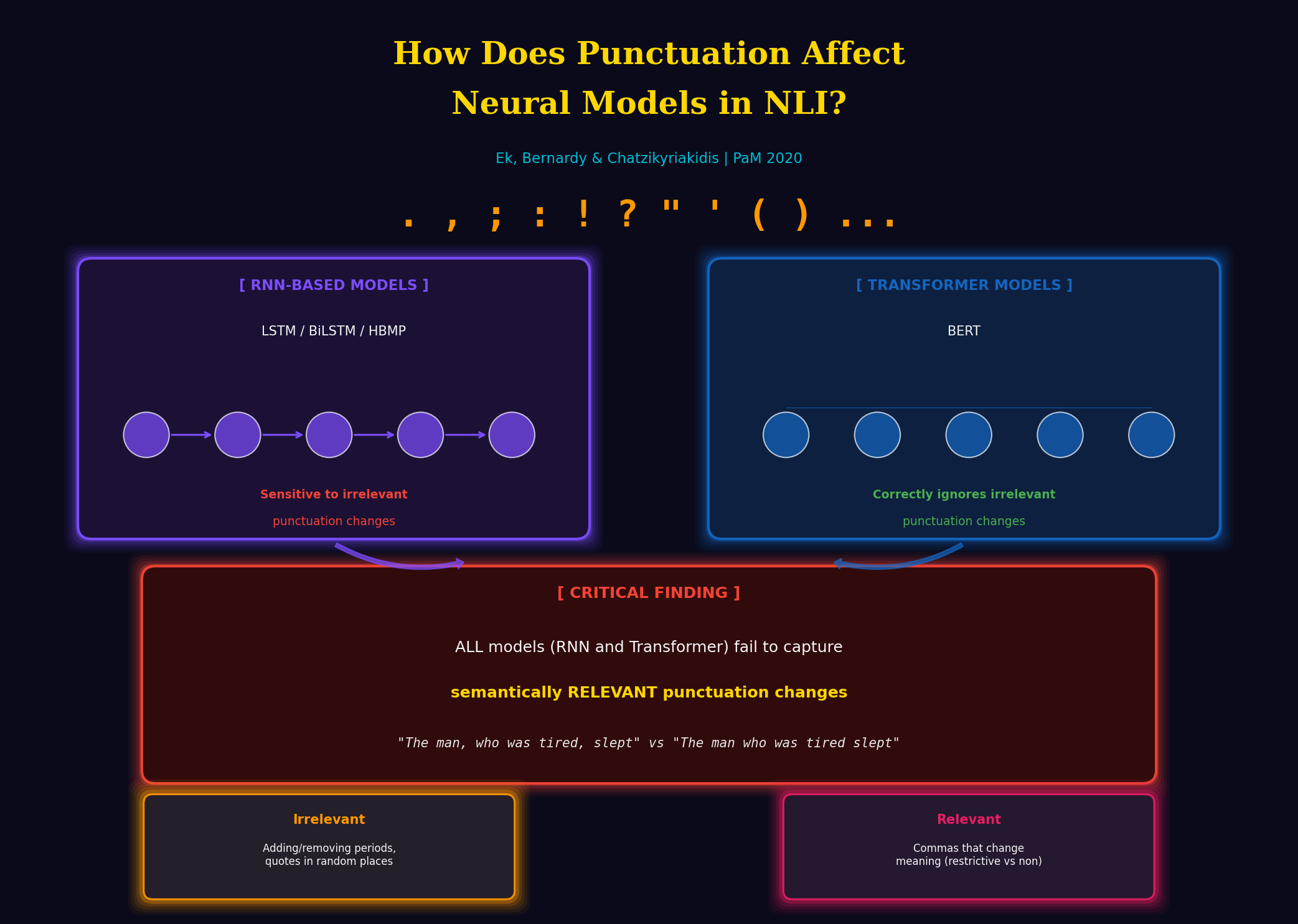

Punctuation Effects

Studying how punctuation marks affect NLI judgments, revealing important insights about how neural models process linguistic structure and surface-level features.

Neural-Symbolic Integration

Developing hybrid systems that combine neural models with symbolic reasoning, leveraging retrieval-augmented generation (RAG) and proof assistants for enhanced inference capabilities.

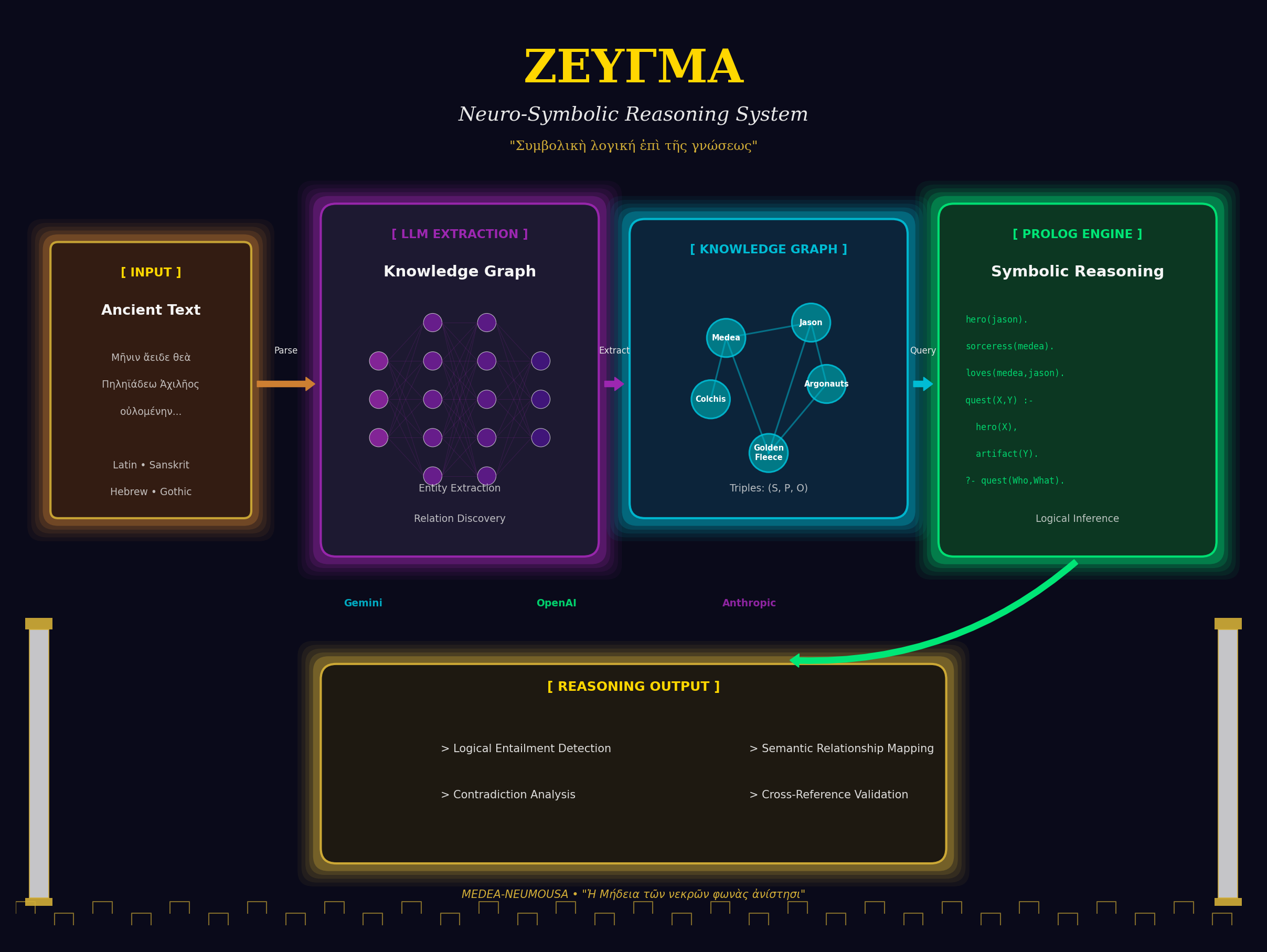

Zeugma

Combining LLM-based extraction with Prolog reasoning to handle zeugma and copredication phenomena.

Major Projects

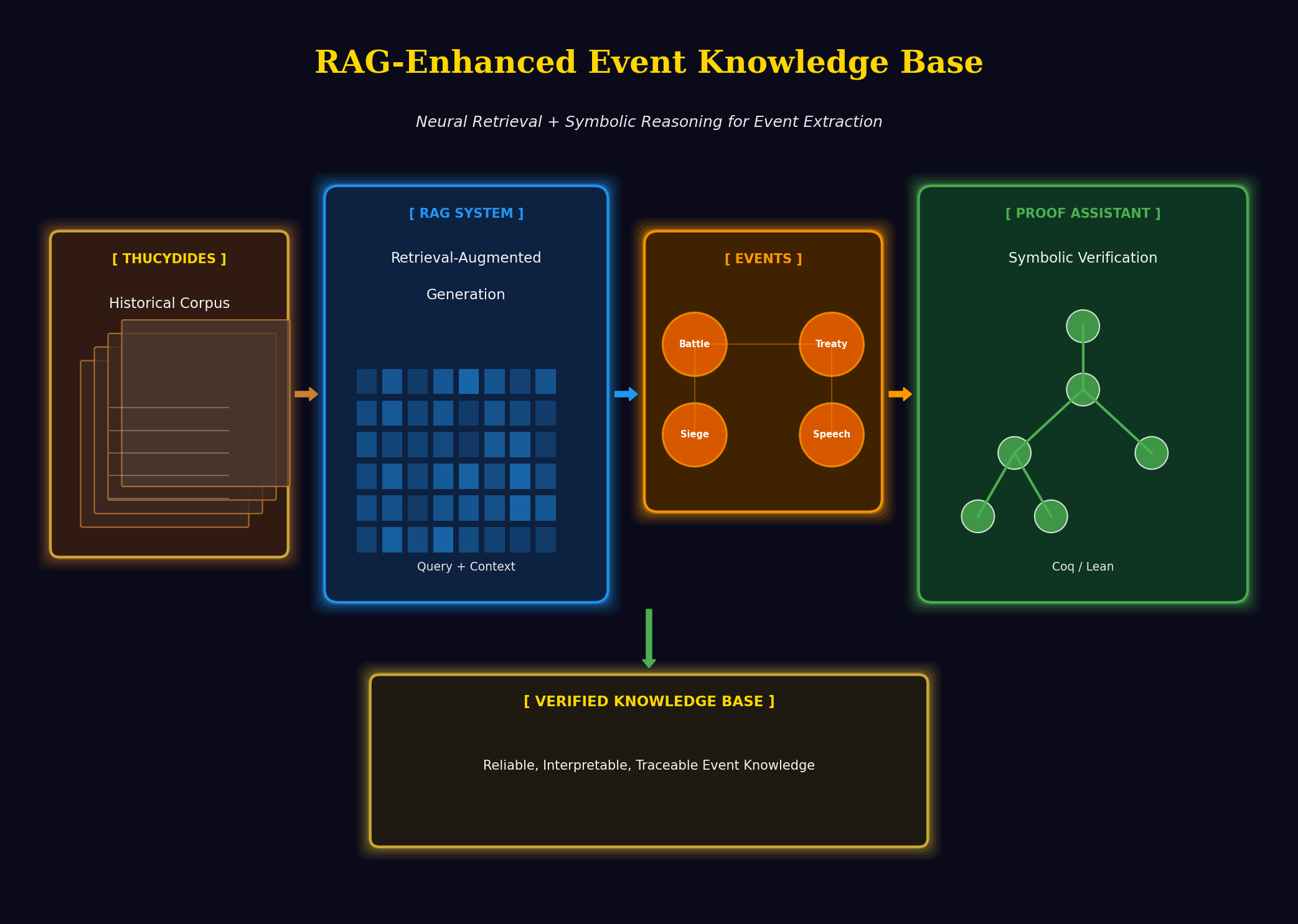

RAG-Enhanced Event Knowledge Base Construction

Our work on RAGged events combines retrieval-augmented generation with proof assistants for event knowledge extraction. This approach demonstrates how neural RAG systems can be enhanced with symbolic reasoning capabilities, creating more reliable and interpretable knowledge bases.

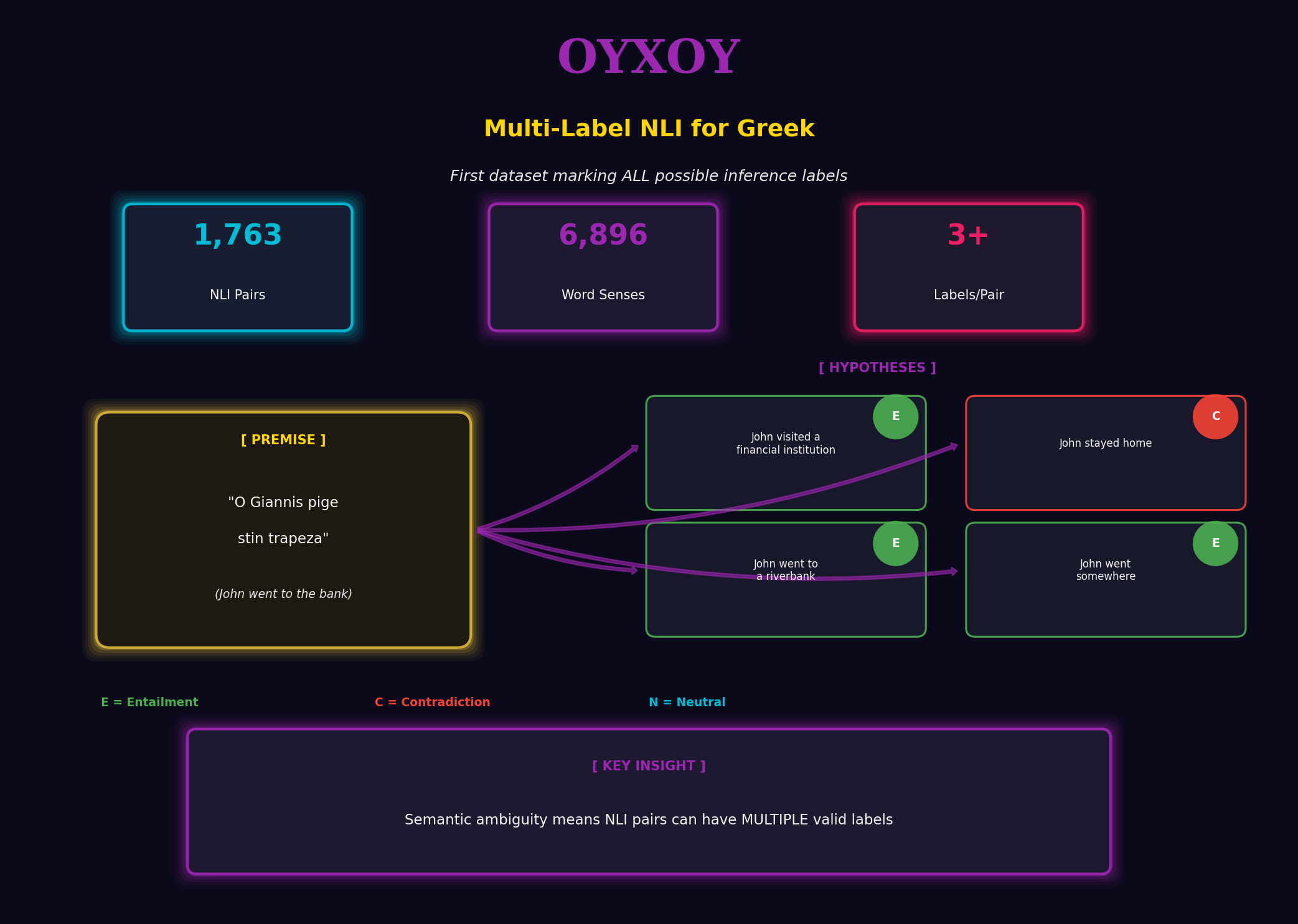

OYXOY: Multi-Label NLI for Greek

OYXOY is the first NLI dataset that marks all possible inference labels, accounting for semantic ambiguity. With 1,763 NLI pairs and 6,896 word senses, it challenges the assumption that NLI has single correct answers and enables research on fine-grained inference judgments.
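To make the multi-label idea concrete, here is a minimal Python sketch of how an NLI pair with several admissible labels might be represented and scored. The class name, field names, scoring functions, and the English example sentence are all illustrative assumptions; they do not reflect the actual OYXOY schema, data, or evaluation protocol.

```python
from dataclasses import dataclass

# The three standard NLI labels; a multi-label item may carry any non-empty subset.
LABELS = frozenset({"entailment", "contradiction", "neutral"})


@dataclass(frozen=True)
class MultiLabelPair:
    """Hypothetical container for an NLI pair with several admissible labels."""
    premise: str
    hypothesis: str
    gold: frozenset  # every label licensed by at least one reading of the pair

    def __post_init__(self):
        # Reject empty label sets and labels outside the standard inventory.
        if not self.gold or not self.gold <= LABELS:
            raise ValueError(f"gold must be a non-empty subset of {sorted(LABELS)}")


def exact_match(pair, predicted):
    """True only if the prediction recovers the full gold label set."""
    return frozenset(predicted) == pair.gold


def jaccard(pair, predicted):
    """Overlap between predicted and gold label sets, giving partial credit."""
    predicted = frozenset(predicted)
    return len(pair.gold & predicted) / len(pair.gold | predicted)


# Invented English example (not taken from the dataset): the attachment
# ambiguity of "with the telescope" licenses two different labels.
example = MultiLabelPair(
    premise="She saw the man with the telescope.",
    hypothesis="She had a telescope.",
    gold=frozenset({"entailment", "neutral"}),
)

print(exact_match(example, {"entailment"}))
print(jaccard(example, {"entailment"}))
```

A single-label system that predicts only "entailment" here fails exact match but still receives partial credit under the set-overlap score, which illustrates why evaluating against one gold label can hide ambiguity.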

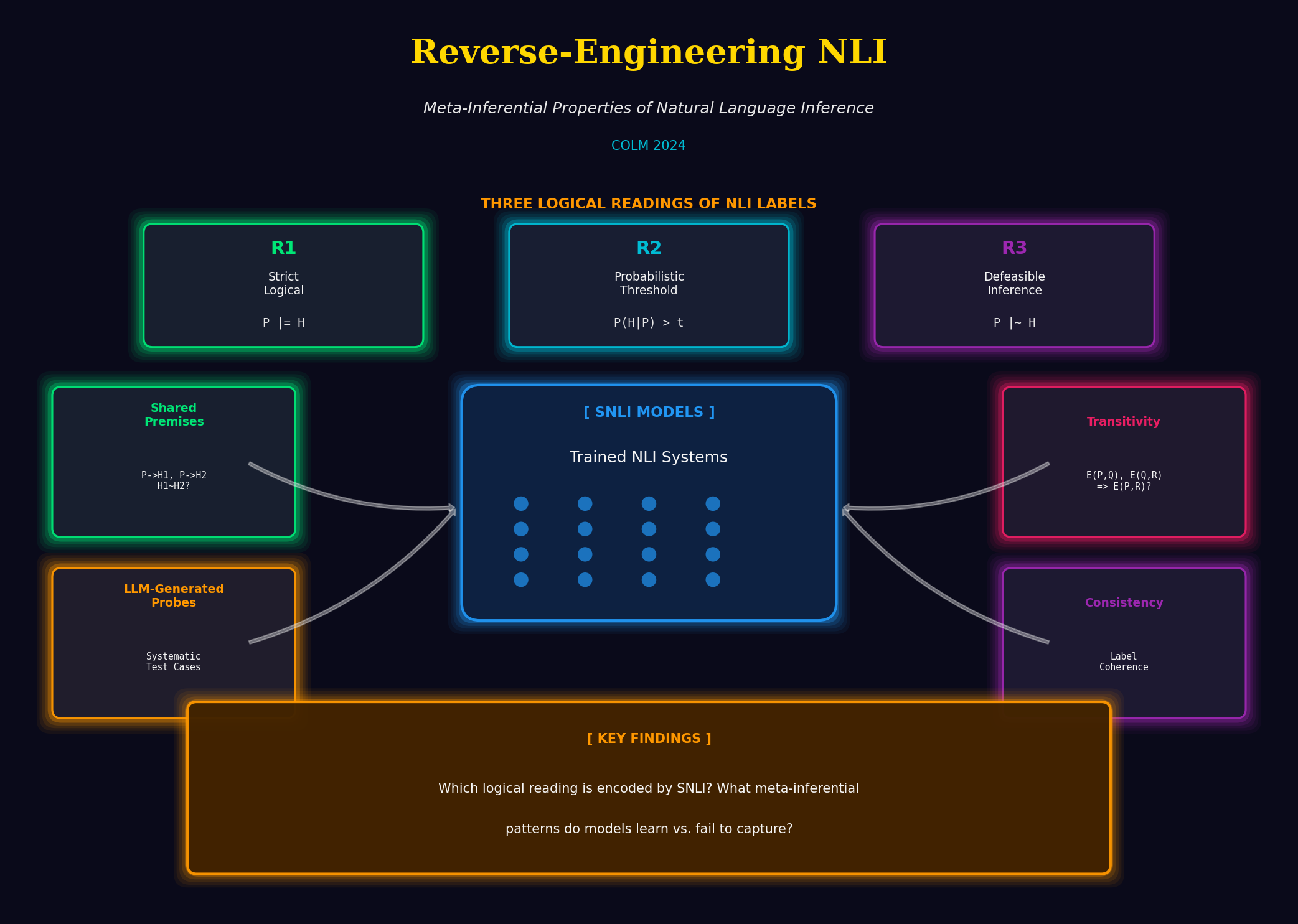

Reverse Engineering NLI

A theoretical investigation of the meta-inferential properties of natural language inference systems. This work examines what reasoning patterns NLI models can handle, revealing fundamental limitations and capabilities of current approaches.

Symbolic NLI Systems

Our symbolic NLI system achieves 81% accuracy on the FraCaS test suite (best performance by a logical system), demonstrating the power of type-theoretical semantics and proof assistants. This work shows how symbolic reasoning can complement neural approaches, providing explainable inference chains.

Neural-Symbolic Integration

Our research philosophy centers on neural-symbolic integration. We embrace neural approaches for their scalability and ability to learn from data, while recognizing that symbolic reasoning provides interpretability, precision, and the ability to handle low-resource scenarios.

Projects like Zeugma and RAGged events exemplify this integration: combining neural models (RAG, transformers) with symbolic components (proof assistants, type theory) to create systems that are both powerful and explainable.

We see this hybrid approach as the way forward for NLI: neural models provide robust representation learning, symbolic systems provide precise reasoning, and together they yield systems that are both scalable and interpretable.

Selected Publications

Reasoning with RAGged events

Chatzikyriakidis, S. (2025)

RAG-enhanced event knowledge base construction that combines retrieval-augmented generation with proof assistants.

Reverse Engineering NLI

Blanck, R., Noble, B., Chatzikyriakidis, S. (2025)

Study of the meta-inferential properties of Natural Language Inference systems.

OYXOY: A Modern NLP Test Suite

Kogkalidis, K., Chatzikyriakidis, S., et al. (2024)

First multi-label NLI dataset marking all possible inference labels for Modern Greek.

Applied Temporal Analysis: FraCaS

Bernardy, J.P., Chatzikyriakidis, S. (2021)

Achieves 81% accuracy on the FraCaS test suite using type-theoretical semantics and proof assistants.